When the European Data Protection Supervisor (EDPS) unveiled its revised guidance on Generative AI and Data Protection in October 2025, it sent a clear message: Europe’s AI future will be built not just on innovation, but on verifiable trust. The guidance — aimed formally at EU institutions but highly relevant to the private sector — brings long-needed clarity to how generative systems should align with data protection law. For companies operating in or with Europe, it marks the start of a new era in risk management, transparency, and accountability.

This article explores what the new EDPS guidance means for organisations, how it intersects with the EU Artificial Intelligence Act (AI Act), and what practical steps boards and compliance officers should take to integrate both into their governance frameworks.

This article is written by Mourad Seghir & Lorena Velo. Mourad and Lorena are part of RSM Netherlands Business Consulting Services with a specific focus on Supply Chain and Strategy matters.

Executive summary (what changed and why it matters now)

- What’s new from the EDPS. On 28 October 2025, the European Data Protection Supervisor (EDPS) released an updated set of guidelines on generative AI and data protection for EU institutions. Although addressed to EU bodies under the EUDPR (Reg. 2018/1725), the guidance sets out concrete controls and guardrails that private-sector controllers can adopt by analogy, such as stronger documentation, caution on web scraping, bias and accuracy testing, and practical ways to honour data subject rights in GenAI systems.

- How this meets the AI Act. For high-risk AI systems, the EU AI Act requires a risk management system that runs across the whole lifecycle (Art. 9) and data and data governance controls (Art. 10); providers carry primary responsibility for these, while deployers have their own duties. The Act also imposes transparency obligations on certain "limited risk" systems (Art. 50), notably disclosure when users interact with AI and labelling of deepfakes and other synthetic media. Applying the EDPS’ practical controls is an effective way to operationalise these obligations within corporate governance, procurement and model operations.

- Business upside. Adopting the EDPS control set and aligning with the AI Act:
  - lowers regulatory, litigation and reputational exposure;
  - speeds up safe adoption (clear acceptance criteria for vendors and internal builds);
  - produces durable evidence of accountability that boards, auditors and supervisors increasingly expect.

The EDPS control themes you can use today

Although drafted for EU institutions, the EDPS’ companion Guidance for Risk Management of AI systems turns high-level principles into practical actions that corporate teams can adopt without waiting for harmonised standards. Five themes dominate:

- Risk management across the lifecycle. The EDPS recommends an ISO 31000-style process with a recurring risk register, risk matrix and treatment plan, and shows where risks arise across the AI lifecycle, from inception through retirement. See the risk matrix (p. 8) and the AI lifecycle diagram (p. 10) to structure your control points and sign-offs.

- Interpretability & explainability are prerequisites. Treat “black-box” behaviour as a risk. The guidance calls for architecture-level documentation, a rationale for model choices, explainability methods (e.g., SHAP/LIME) and documented limits of use, so that users, auditors and affected individuals can understand outcomes.

- Fairness and data quality first. Specific risks and countermeasures cover bias from poor data quality, sampling/historical bias, overfitting and algorithmic bias, plus practical audits and metrics to detect and mitigate them. Annexes include metrics and checklists by lifecycle phase that you can port into your model cards and validation templates.

- Accuracy (two meanings) and drift. The EDPS differentiates legal “accuracy” of personal data from statistical accuracy of models and recommends validation against edge cases and drift detection with retraining triggers.

- Security & rights by design. Measures cover training data leakage (e.g., inversion/membership inference), API leakage, storage breaches, as well as realistic paths to honour access, rectification and erasure in complex models (e.g., metadata retention, retrieval tools, machine unlearning or output filtering where unlearning is not viable).
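To make the risk-management theme concrete, here is a minimal Python sketch of a risk register scored on a likelihood × impact matrix, with a check for high risks that still lack a treatment plan. The 1–5 scales, the banding thresholds and all field and function names are assumptions chosen for illustration, not values taken from the EDPS guidance; calibrate them against the matrix on p. 8 and your own risk appetite.

```python
from dataclasses import dataclass

# Assumed 1-5 scales for likelihood and impact (illustrative, not EDPS values).
LEVELS = range(1, 6)


@dataclass
class Risk:
    risk_id: str
    lifecycle_phase: str  # e.g. "data acquisition", "deployment"
    description: str
    likelihood: int       # 1 (rare) .. 5 (almost certain)
    impact: int           # 1 (negligible) .. 5 (severe)
    treatment: str = ""   # documented treatment plan, empty if none yet

    def __post_init__(self) -> None:
        if self.likelihood not in LEVELS or self.impact not in LEVELS:
            raise ValueError("likelihood and impact must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    @property
    def band(self) -> str:
        # Assumed banding thresholds; adjust to your own risk appetite.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def needs_signoff(register: list) -> list:
    """High-band risks with no treatment plan, highest score first."""
    open_items = [r for r in register if r.band == "high" and not r.treatment]
    return sorted(open_items, key=lambda r: r.score, reverse=True)


register = [
    Risk("R1", "data acquisition", "Personal data scraped without provenance", 4, 4),
    Risk("R2", "deployment", "Model regurgitates training data", 3, 5,
         treatment="Output filtering and leakage tests"),
    Risk("R3", "monitoring", "Undetected drift degrades accuracy", 3, 3),
]

for risk in needs_signoff(register):
    print(risk.risk_id, risk.score, risk.band)  # R1 16 high
```

Reviewing the register on a recurring cycle, and blocking a release gate while `needs_signoff` returns anything, is one way to turn the "recurring risk register" into an enforceable control.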

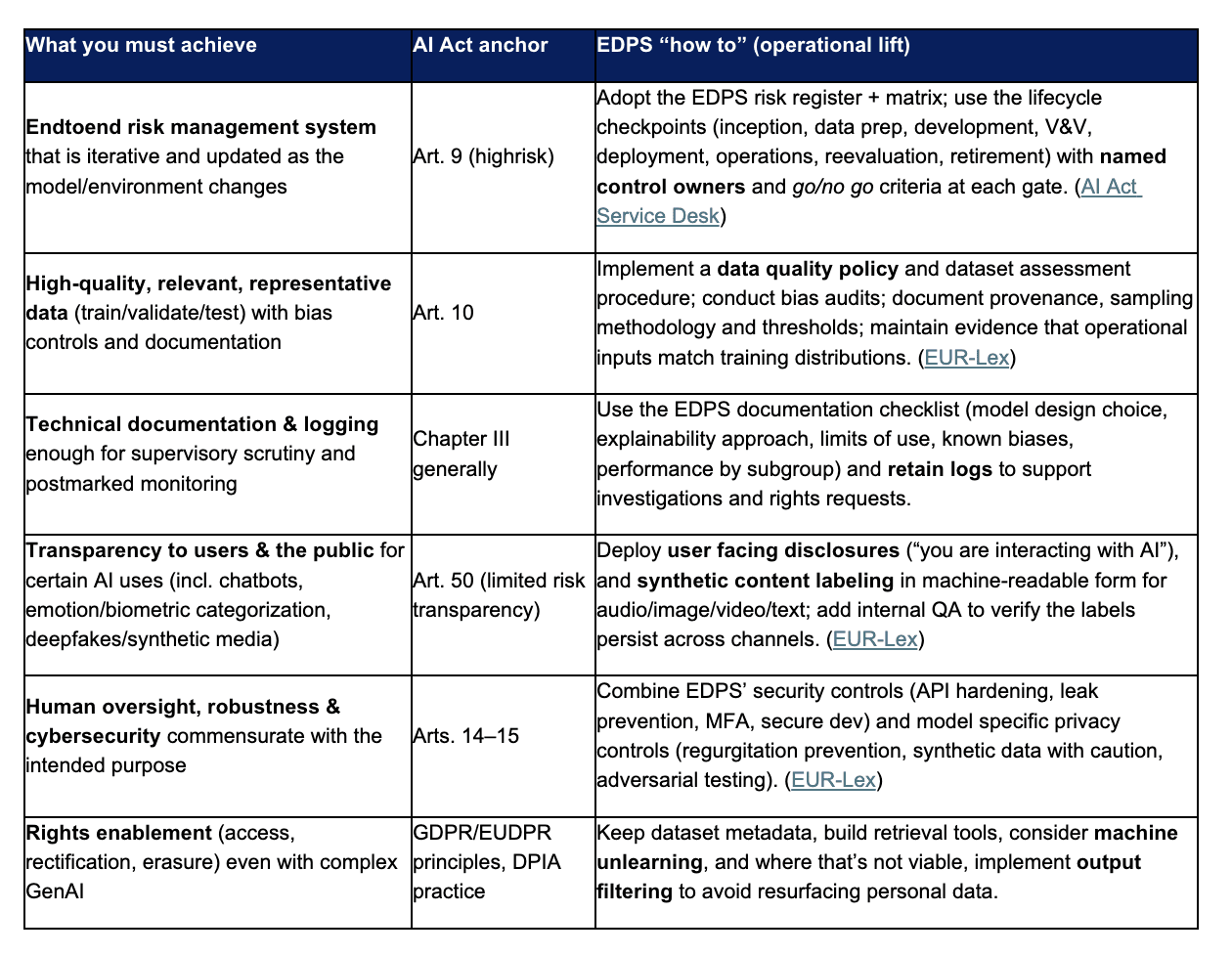

These measures also support AI Act compliance, because they map closely onto the requirements for high-risk AI systems in Chapter III, Section 2 (Arts. 9–15): risk management, data governance, technical documentation and record-keeping (logging), transparency and provision of information to deployers, human oversight, and accuracy, robustness and cybersecurity.

Where the AI Act and EDPS guidance intersect (and what to implement)

Below is a practical mapping you can embed in your AI policy and control framework.

Note on scope: the EDPS generative AI guidance is formally aimed at EU institutions under the EUDPR; private companies operate under the GDPR. The underlying principles (fairness, data minimisation, accuracy, security, transparency and accountability) and the AI Act’s lifecycle duties are broadly aligned, so the EDPS operational measures are a pragmatic way to meet both regimes in one framework.

90-Day AI Action Plan for Boards, Compliance & Risk (RSM NL – Practical Summary)

Days 0–30 — Baseline & Governance

Begin by mapping all AI use cases, including “shadow AI”, and classifying each against the AI Act risk levels. Assign clear functional owners (Product, Data, Security, Compliance/DPO) and a Board sponsor. Adopt the EDPS lifecycle as your delivery model, embedding the risk matrix and checklists into procurement, model cards and release gates. Enforce a strict data-source rule: no scraping of personal data without confirmed legality, minimisation and documented provenance.
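The data-source rule lends itself to an automated check at the pipeline's release gate. The Python sketch below assumes each candidate source is described by a metadata record; `DataSource` and `admission_blockers` are hypothetical names, and a gate like this only verifies that the required documentation exists. It does not replace the DPO's legal assessment.

```python
from dataclasses import dataclass


@dataclass
class DataSource:
    """Hypothetical metadata record kept for every candidate training source."""
    name: str
    contains_personal_data: bool
    collected_by_scraping: bool
    legality_confirmed: bool       # e.g. lawful basis signed off by the DPO
    minimisation_documented: bool
    provenance_documented: bool


def admission_blockers(src: DataSource) -> list:
    """Reasons a source fails the data-source rule; an empty list means admit."""
    if not (src.contains_personal_data and src.collected_by_scraping):
        return []  # the rule as stated targets scraped personal data only
    blockers = []
    if not src.legality_confirmed:
        blockers.append("legality not confirmed")
    if not src.minimisation_documented:
        blockers.append("minimisation not documented")
    if not src.provenance_documented:
        blockers.append("provenance not documented")
    return blockers


crawl = DataSource(
    name="public-forum-crawl",
    contains_personal_data=True,
    collected_by_scraping=True,
    legality_confirmed=False,
    minimisation_documented=True,
    provenance_documented=False,
)
print(admission_blockers(crawl))
# ['legality not confirmed', 'provenance not documented']
```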

Days 31–60 — High-Impact Controls

Build your Art. 10 data-governance pack: QA criteria, representativeness checks, bias metrics, drift monitoring and retraining triggers. Define your explainability standard, including preferred methods and the minimum clarity required for user-facing explanations. Harden AI security through role-based access control (RBAC), throttled APIs, encryption, secure coding and controls that prevent the model from regurgitating training data. Activate transparency controls by surfacing AI-interaction notices and applying robust, machine-readable synthetic-content labels across all channels.
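Two of these Art. 10 checks, drift monitoring with a retraining trigger and a bias metric, can be prototyped in standard-library Python. This is a sketch under stated assumptions: it uses the Population Stability Index (PSI) for input drift and the demographic parity difference for bias, which are common choices but are not prescribed by the EDPS or the AI Act, and the 0.2 and 0.1 thresholds are illustrative values to be set per use case.

```python
import math
from bisect import bisect_right

# Illustrative thresholds (assumptions, not EDPS or AI Act values).
PSI_THRESHOLD = 0.2  # common rule of thumb for a significant shift
DPD_THRESHOLD = 0.1  # tolerated gap in positive-outcome rates between groups


def bin_proportions(values, edges):
    """Share of values in each of len(edges) + 1 bins cut at sorted `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(baseline, current, edges, eps=1e-6):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    # eps keeps the logarithm finite when a bin is empty in either sample.
    return sum((a - e) * math.log((a + eps) / (e + eps))
               for e, a in zip(expected, actual))


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups."""
    rates = []
    for g in set(groups):
        group_preds = [p for p, grp in zip(predictions, groups) if grp == g]
        rates.append(sum(group_preds) / len(group_preds))
    return max(rates) - min(rates)


def retraining_reasons(psi_value, dpd_value):
    """Triggers that fired; an empty list means no trigger fired."""
    reasons = []
    if psi_value > PSI_THRESHOLD:
        reasons.append(f"input drift: PSI {psi_value:.2f} > {PSI_THRESHOLD}")
    if dpd_value > DPD_THRESHOLD:
        reasons.append(f"bias: parity gap {dpd_value:.2f} > {DPD_THRESHOLD}")
    return reasons


edges = [2.5, 4.5, 6.5]
baseline = [1, 2, 3, 4, 5, 6, 7, 8]   # feature values at validation time
current = [5, 6, 7, 8, 7, 8, 7, 8]    # same feature in production
preds = [1, 1, 0, 1, 0, 0, 1, 0]      # binary model decisions
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(retraining_reasons(psi(baseline, current, edges),
                         demographic_parity_difference(preds, groups)))
```

In practice you would run checks like these on a schedule against production inputs and decisions, log every trigger that fires, and route it into the retraining and release-gate process rather than retraining automatically.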

Days 61–90 — Evidence & Scaling

Complete a full DPIA/AI risk assessment backed by logs, dataset QA, validation and user-testing evidence. Establish a GenAI rights playbook covering request intake, data retrieval, rectification and erasure handling and, where feasible, machine unlearning, with output filtering as a fallback. Launch quarterly Board reporting with an AI risk dashboard tracking key risks, mitigations, drift and bias alerts, incidents and supplier status.
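Where unlearning is not feasible, the output-filtering fallback can start as a suppression list applied to every response before it is shown. The Python sketch below is illustrative only: `ErasureOutputFilter` is a hypothetical helper that redacts exact identifiers case-insensitively and as whole words, so it will miss paraphrases, nicknames and indirect identifiers, and it does nothing about the data that remains inside the model.

```python
import re
from typing import Optional, Set, Tuple


class ErasureOutputFilter:
    """Redacts identifiers of data subjects whose erasure could not be
    achieved by unlearning. Hypothetical helper for illustration only."""

    def __init__(self, placeholder: str = "[REDACTED]") -> None:
        self.placeholder = placeholder
        self._terms: Set[str] = set()
        self._pattern: Optional[re.Pattern] = None

    def add_subject(self, *identifiers: str) -> None:
        """Register names, e-mail addresses or other direct identifiers."""
        self._terms.update(i.strip() for i in identifiers if i.strip())
        if not self._terms:
            return  # an empty alternation would match at every word boundary
        # Longest first, so "Jane Doe" is redacted whole rather than as "Jane".
        alternatives = sorted(self._terms, key=len, reverse=True)
        self._pattern = re.compile(
            r"\b(?:" + "|".join(map(re.escape, alternatives)) + r")\b",
            re.IGNORECASE,
        )

    def apply(self, text: str) -> Tuple[str, int]:
        """Return the filtered text and the number of redactions (for logs)."""
        if self._pattern is None:
            return text, 0
        return self._pattern.subn(self.placeholder, text)


output_filter = ErasureOutputFilter()
output_filter.add_subject("Jane Doe", "jane.doe@example.com")
print(output_filter.apply("Contact Jane Doe at jane.doe@example.com."))
# ('Contact [REDACTED] at [REDACTED].', 2)
```

Logging the redaction count per response gives you evidence for the rights playbook and the DPIA that the fallback is actually operating.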

Build vs. buy: supplier due diligence you can copy-paste into your RFPs

When procuring GenAI or analytics, ask vendors to provide (and make acceptance contingent on) the following, mirroring EDPS recommendations and AI Act Chapter III:

- Technical dossier & model card: architecture, training approach, known limits, subgroup performance, explainability method, human-oversight model, acceptable use constraints.

- Data governance evidence (Art. 10): data sources & provenance, representativeness testing, bias audits and remediation, drift monitoring strategy and retraining policy (EUR-Lex).

- Security: model-integrity controls, API hardening, leakage testing results, incident response plan.

- Transparency controls (Art. 50): how synthetic content is marked (machine-readable) and how user-interaction disclosures are surfaced; testing evidence for the robustness of labels in your channels (EUR-Lex).

- Rights handling: tools for data retrieval, rectification/erasure paths (including feasibility of machine unlearning) and how they will support your DPIA.
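If you track vendor submissions in a structured way, the "acceptance contingent on evidence" condition can be checked mechanically. The sketch below assumes a hypothetical evidence taxonomy whose keys paraphrase the five bullets above; it verifies only that each item was supplied, not that it is adequate, which still requires expert review.

```python
# Hypothetical evidence taxonomy mirroring the RFP bullets; adapt the keys
# to your own procurement templates.
REQUIRED_EVIDENCE = {
    "technical_dossier": [
        "architecture", "training_approach", "known_limits",
        "subgroup_performance", "explainability_method",
        "human_oversight_model", "acceptable_use_constraints",
    ],
    "data_governance": [
        "sources_and_provenance", "representativeness_testing",
        "bias_audits_and_remediation", "drift_monitoring", "retraining_policy",
    ],
    "security": [
        "model_integrity_controls", "api_hardening",
        "leakage_test_results", "incident_response_plan",
    ],
    "transparency": [
        "machine_readable_marking", "interaction_disclosures",
        "label_robustness_tests",
    ],
    "rights_handling": [
        "data_retrieval_tools", "rectification_erasure_paths",
        "unlearning_feasibility",
    ],
}


def missing_evidence(submission: dict) -> dict:
    """Map each evidence area to the required items the vendor did not supply."""
    gaps = {}
    for area, items in REQUIRED_EVIDENCE.items():
        provided = submission.get(area, set())
        missing = [item for item in items if item not in provided]
        if missing:
            gaps[area] = missing
    return gaps


def acceptable(submission: dict) -> bool:
    """Acceptance is contingent on every required item being present."""
    return not missing_evidence(submission)


# Example: a vendor that supplied everything except leakage test results.
submission = {area: set(items) for area, items in REQUIRED_EVIDENCE.items()}
submission["security"].discard("leakage_test_results")
print(missing_evidence(submission))  # {'security': ['leakage_test_results']}
print(acceptable(submission))        # False
```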

The EDPS guidance explicitly calls out the risk of unclear information from AI providers and lists what to demand upfront to avoid operating a black box; bring those items directly into your contracts and acceptance tests.

Forward thinking

As Europe shifts from aspirational principles to enforceable AI practice, transparency is becoming the clearest indicator of organisational maturity. Article 50 of the AI Act makes this visible: users must know when they are interacting with AI, AI-generated synthetic content must be marked in a machine-readable format and be detectable as artificially generated or manipulated, and people exposed to emotion recognition or biometric categorisation systems must be explicitly informed. Implementing these duties is no longer a matter of adding notices to a chatbot or watermarking media; it requires embedding transparency into design, content pipelines and QA, and treating it as a standing control rather than a one-off compliance exercise.

For leadership teams, this is where the next phase of AI governance begins. Boards will be expected not just to approve AI strategies, but to interrogate whether the organisation can evidence trustworthy AI in day-to-day operations. The following oversight questions should shape your next meeting agenda:

- Inventory & classification: “Do we know where GenAI is used across the business (including ‘shadow AI’), and how each use case maps to AI Act risk categories and privacy risk?”

- Data governance maturity: “Can we evidence Article 10 controls—data sources, representativeness, bias metrics and drift monitoring—for our most material models?”

- Transparency & brand risk: “Are our chatbot disclosures and synthetic-content labels active, machine-readable and robust in every channel?”

- Rights readiness: “If a data subject asks for access or erasure today, can we process it for GenAI outputs and training data—and what is our plan where unlearning is not feasible?”

- Evidence of accountability: “Do we maintain a complete AI technical file for each system—risk register, validations, logs and change history—that would withstand auditor or supervisory scrutiny?”

These questions mark the path forward. Organisations that can answer them with confidence will not only meet Europe’s emerging regulatory expectations but also differentiate themselves through verifiable trust—turning compliance into a durable advantage in the next chapter of AI adoption.

RSM is a thought leader in the field of Supply Chain Management and Strategy matters including AI developments. We offer frequent insights through training and sharing of thought leadership based on a detailed knowledge of industry developments and practical applications in working with our customers. If you want to know more, please contact one of our consultants.