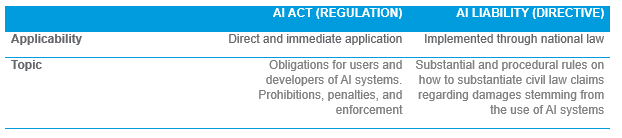

The use of artificial intelligence (AI) is becoming increasingly prominent across most industries. The International Data Corporation (IDC), for instance, found that the worldwide AI market (including software, hardware and services) is expected to reach half a trillion USD in 2023. Given AI's anticipated significant impact on society, the European Commission has presented two proposals to regulate the development and use of AI: the Artificial Intelligence Act (a regulation) and the AI Liability Directive.

This article is written by Cem Adiyaman and Mourad Seghir. Cem and Mourad both have a strong focus on Law & Technology within RSM Netherlands Business Consulting Services.

Initial thoughts

The European Union (EU) recognizes the immense potential of AI in areas such as healthcare, sustainability, public services and economic competitiveness. However, the EU is also aware of the risks associated with AI, such as bias, discrimination and privacy concerns. In response, the EU has put forward proposals to ensure that AI systems used within its borders are secure and reliable and respect fundamental rights.

One of the primary objectives of these proposals is to foster responsible innovation while maintaining a level playing field for all stakeholders. The EU intends to achieve this by striking a delicate balance between the benefits and risks of AI, with a focus on human welfare and fundamental rights. Moreover, the EU aims to position itself as a global leader in technological progress without compromising its core values.

The introduction of the AI Liability Directive is a notable step taken by the EU to discourage regulated actors from engaging in unsafe practices. By establishing clear and harmonized liability rules, the directive aims to hold accountable those responsible for any harm caused by AI systems.

The EU's regulatory approach towards AI reflects a comprehensive understanding of the complex challenges and ethical implications associated with its commercial use. Over a series of articles, RSM will delve into not only the intricacies of the EU's regulatory landscape for AI but also the ethical considerations surrounding its responsible deployment for commercial purposes.

Upcoming AI legislation in Europe: scope

The EU's proposed Artificial Intelligence Act aims to regulate high-risk AI systems in the EU. What constitutes a high-risk AI system is still under debate, but the potential to affect fundamental rights is a key factor. For instance, an AI system used in recruitment that autonomously screens and filters candidates, making predictions and decisions about them, could perpetuate discriminatory practices and harm fundamental rights on a large scale if left unsupervised.

Under the proposed Act, the primary regulatory burden falls on the provider, the entity that develops the high-risk AI system. Providers must put their systems through a conformity assessment demonstrating compliance with requirements on human oversight, risk management, transparency and data governance.

Users of these high-risk systems, such as businesses, also have obligations. They must follow the provider's instructions and implement the human oversight measures the provider indicates. Where users control the input data, they must ensure that it is relevant to the system's intended purpose. They are also required to continuously monitor the system for potential risks to fundamental rights and to promptly report incidents or malfunctions to the provider or distributor. Finally, users should keep the system's logs for an appropriate period and carry out data protection impact assessments, based on the provider's instructions, to evaluate and mitigate the risks associated with data processing.

If businesses that use high-risk AI systems fail to comply with their obligations, they could face penalties of up to EUR 20,000,000 or 4% of their total worldwide annual turnover, whichever is higher. In addition, failing to comply with the obligation to monitor the system and exercise adequate control over the input data triggers a legal presumption against the business using the high-risk AI system. Under the proposed EU Directive on non-contractual liability for AI, when a claim for damages is brought against a non-compliant user of a high-risk AI system, the causal link between the user's fault and the output of the AI system that gave rise to the damage will be presumed, although the user can rebut this presumption.
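The "whichever is higher" rule is simple arithmetic, and a short sketch makes it concrete. The Python below is a minimal illustration that assumes only the two caps quoted above (EUR 20,000,000 and 4% of total worldwide annual turnover). The function name `max_fine_eur` is hypothetical, and the result is the statutory ceiling, not the fine a regulator would actually impose.

```python
from decimal import Decimal

# Fixed cap quoted in the article for non-compliant users of high-risk AI systems.
FIXED_CAP_EUR = Decimal("20000000")
# Turnover-based cap: 4% of total worldwide annual turnover.
TURNOVER_RATE = Decimal("0.04")


def max_fine_eur(worldwide_annual_turnover_eur: Decimal) -> Decimal:
    """Hypothetical helper: return the upper limit of the fine,
    i.e. whichever of the two caps is higher, rounded to cents."""
    if worldwide_annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    turnover_cap = worldwide_annual_turnover_eur * TURNOVER_RATE
    # The ceiling is the larger of the fixed amount and the turnover share.
    return max(FIXED_CAP_EUR, turnover_cap).quantize(Decimal("0.01"))


if __name__ == "__main__":
    for turnover in (Decimal("100000000"), Decimal("2000000000")):
        print(f"turnover EUR {turnover:,}: maximum fine EUR {max_fine_eur(turnover):,}")
```

For a business with EUR 100 million in turnover, the fixed cap applies, because 4% would be only EUR 4 million. At EUR 2 billion, the turnover-based cap of EUR 80 million applies instead. The two caps meet at a turnover of EUR 500 million. `Decimal` is used rather than floating point so that monetary amounts are not subject to binary rounding error.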

How to prepare

With the European AI Act expected to take effect by the end of 2024, organizations should take early steps towards compliance. The Act applies to both public and private entities, regardless of whether they are located inside or outside the EU, which makes compliance a necessity for nearly all organizations involved with AI systems in the Union.

To foster responsible and ethical AI practices, AI system providers should begin their preparations by identifying which AI systems are present and in use within their organization and assessing how they are used. They should also assign specific roles responsible for overseeing AI systems and establish a framework for continuous risk management review.

Compliance with the AI Act relies predominantly on self-assessment, and the associated costs are estimated to be three times those of GDPR compliance. EU impact assessments suggest that small and medium-sized businesses may face compliance expenses of up to €160,000 to bring their AI systems in line with all relevant requirements. Adhering to ethical data usage and privacy principles is no longer a mere checkbox exercise but has become a vital business imperative. While compliance may increase costs, it also gives organizations an opportunity to stand out among customers who prioritize ethical data practices.

To navigate the AI Act and the AI Liability Directive successfully, organizations are advised to take a proactive approach to risk management. This involves mapping the AI systems used within the organization, assigning distinct roles and responsibilities, carrying out continuous risk management reviews, and establishing interdisciplinary controls and cross-functional reporting lines. Compliance not only demonstrates a commitment to ethical data usage and privacy principles but also enables firms to differentiate themselves in the marketplace.