As artificial intelligence (AI) continues to advance rapidly, businesses across every sector are exploring how to use it to improve efficiency and reduce costs.

But as we barrel ahead at full speed, executives must stay alert to the very real risks AI presents to their business. From the exposure of intellectual property to costly customer-facing errors, failing to establish guardrails around AI use can have serious consequences.

Adopting AI without guardrails is like flying a plane while it’s still being built, or driving a high-performance car with no safety features: a lot can go wrong, and fast.

While every business wants to make the most of new technology and stay ahead of the curve, boards and leadership teams must approach AI risks like any other threat that has the potential to damage or even sink their business.

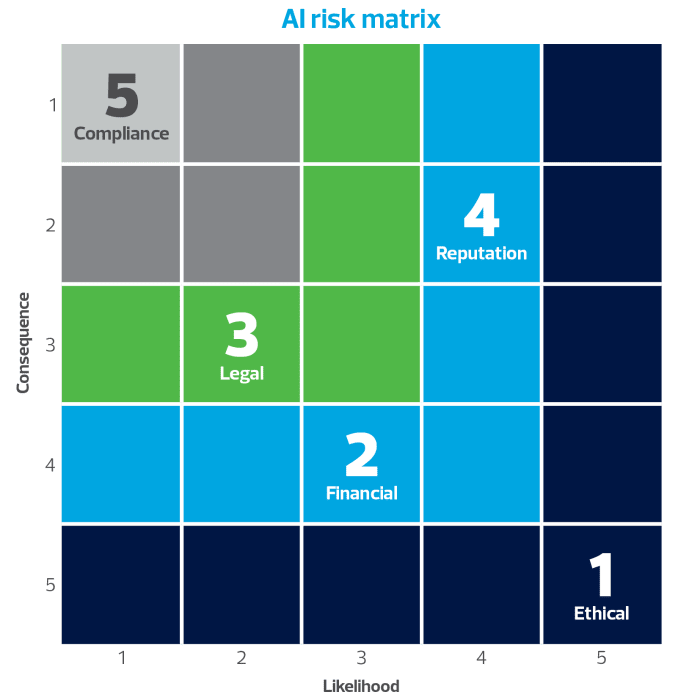

This is where an AI risk matrix can prove highly valuable and serve as a foundation for structured risk-based decisions surrounding AI.

Adapting traditional risk frameworks for AI threats

In approaching AI-related risks, we don’t need to reinvent the wheel. Traditional risk frameworks and tools provide solid methods to identify, assess and manage risk, and these can be adapted to address the unique challenges presented by AI.

One such tool is a risk matrix. Organisations typically use risk matrices to:

- Identify and assess potential risks.

- Compare the likelihood and impact of risks side by side.

- Prioritise which risks need the most attention.

- Guide decision making and resource allocation.

- Support transparent discussions about risk tolerance.

Because they are visual, risk matrices make it easier to compare the likelihood and consequence of different risks, so leaders can focus their attention where it’s needed most. Risk matrices provide a clear structure for effective risk management in anything from day-to-day operations to large-scale strategic projects, and the same approach can be adapted to address the new and evolving threats posed by AI.
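To make the idea concrete, here is a minimal Python sketch of how a typical 5x5 matrix turns likelihood and impact into a single rating. The 1-5 scales, the labels, and the rating bands are illustrative assumptions rather than a prescribed standard; each organisation should calibrate them to its own risk appetite.

```python
# Minimal 5x5 risk matrix sketch.
# Assumptions (illustrative only): 1-5 scales, these labels, and these rating bands.
LIKELIHOOD = {1: "Rare", 2: "Unlikely", 3: "Possible", 4: "Likely", 5: "Almost certain"}
IMPACT = {1: "Insignificant", 2: "Minor", 3: "Moderate", 4: "Major", 5: "Severe"}

# Hypothetical bands: (minimum score, rating), checked from highest to lowest.
BANDS = [(15, "Extreme"), (10, "High"), (5, "Medium"), (1, "Low")]


def risk_score(likelihood: int, impact: int) -> int:
    """Combine likelihood and impact into a single score from 1 to 25."""
    if likelihood not in LIKELIHOOD or impact not in IMPACT:
        raise ValueError("likelihood and impact must be integers from 1 to 5")
    return likelihood * impact


def risk_rating(score: int) -> str:
    """Map a score onto a rating band."""
    for minimum, rating in BANDS:
        if score >= minimum:
            return rating
    raise ValueError("score must be at least 1")


# Example: a 'Likely' risk with 'Major' impact.
score = risk_score(4, 4)
print(score, risk_rating(score))  # 16 Extreme
```

Multiplying the two scores is a common convention; some organisations instead use a lookup table so that, for example, any 'Severe' impact is rated at least 'High' regardless of likelihood.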

How an AI risk matrix helps

An AI risk matrix provides a clear view of the scale, likelihood, and potential impact of AI-related risks – whether they involve data, privacy, ethics, operations, compliance, security, reputation, or any other commercial aspect.

In the first instance, we can use an AI risk matrix to identify and assess inherent risk. This shows the level of exposure an organisation faces before any safeguards are in place. Once controls are identified, we can use the matrix again to understand the level of risk that remains (residual risk).

Depending on how effective the controls are, AI risks may be substantially reduced or only partially mitigated. This visual comparison helps executives and boards see where risks sit in relation to the organisation’s risk appetite, so they can decide which ones need further action or ongoing monitoring.
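The comparison between inherent risk, residual risk, and risk appetite can be sketched in a few lines of Python. This is a hypothetical illustration: it assumes 1-5 scales and models control effectiveness as a single fraction that reduces the inherent score proportionally, which is only one of several possible conventions.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    name: str
    likelihood: int                     # assumed 1-5 scale
    impact: int                         # assumed 1-5 scale
    control_effectiveness: float = 0.0  # hypothetical: 0.0 = no controls, 1.0 = fully effective

    def __post_init__(self) -> None:
        if not (1 <= self.likelihood <= 5 and 1 <= self.impact <= 5):
            raise ValueError("likelihood and impact must be between 1 and 5")
        if not 0.0 <= self.control_effectiveness <= 1.0:
            raise ValueError("control_effectiveness must be between 0.0 and 1.0")

    @property
    def inherent(self) -> int:
        """Exposure before any safeguards are in place."""
        return self.likelihood * self.impact

    @property
    def residual(self) -> float:
        """Exposure that remains after controls (assumes a proportional reduction)."""
        return self.inherent * (1 - self.control_effectiveness)


def decision(risk: AIRisk, appetite: float) -> str:
    """Compare residual risk with the organisation's risk appetite."""
    return "further action" if risk.residual > appetite else "monitor"


# Hypothetical example: staff pasting confidential data into a public chatbot.
risk = AIRisk("Confidential data entered into public AI", likelihood=4, impact=5,
              control_effectiveness=0.6)
print(risk.inherent, round(risk.residual, 1), decision(risk, appetite=6))
# 20 8.0 further action
```

Many organisations prefer to re-rate likelihood and impact directly once controls are assessed; the proportional reduction above simply keeps the example short.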

Step-by-step guide to building an AI risk matrix

Building an AI risk matrix starts with:

- Identifying the risks associated with AI systems.

- Assessing their likelihood and impact.

- Plotting them in a visual format.

Begin with inherent risks (the exposure before any controls), determine appropriate mitigations, and map any remaining risks. This will help decision makers prioritise actions and resources while maintaining a clear overview of both operational and strategic AI risks.

1. Identify AI risk sources

This step lays the groundwork for assessing and managing AI risks by establishing exactly where potential problems originate. It ensures the AI risk matrix accurately reflects the organisation’s exposure, so controls can be implemented where they matter most.

AI risks can arise from two main sources:

Externally sourced AI technology, such as ChatGPT or Copilot. Risks could include data privacy breaches or inaccurate outputs.

Internally developed AI solutions. We can further segment these systems into four main types – each with its own set of risks. The four types are:

- Generative AI, which uses large language models such as those behind ChatGPT and Copilot.

- Machine learning models, which learn patterns from data to make predictions or decisions, rather than following explicitly programmed rules.

- AI agents, which are systems designed to perceive their environment, learn from it, and make decisions before taking action. These are typically task-focused and operate within defined parameters. For example, an AI agent might replace call centre staff in handling routine customer queries.

- Agentic AI, which refers to more advanced systems that act with a degree of independence and strategic intent. Often described as artificial beings, they’re capable of pursuing broader objectives rather than responding to specific prompts. While still in the early stages of development, agentic AI has potential applications in complex or high-risk settings, such as hazardous robotics or military operations.

Some of the risks that could arise from internally developed AI solutions include:

- Hallucination – AI generating incorrect or misleading outputs.

- Bias – models reflecting prejudices in training data.

- Data poisoning – deliberate contamination of training data, including through corporate espionage.

- Privacy risks – AI inadvertently exposing personal or confidential information.

Using a risk matrix allows us to break down all risks by source and type, and rate them based on two criteria: what the AI is capable of doing within the environment, and the potential impact if something goes wrong.
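One way to record risks by source and type, and rate them on the two criteria above, is sketched below. The capability scale, class names, and example entries are hypothetical; they simply mirror the sources, AI types, and risks described in this section.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    EXTERNAL = "Externally sourced"
    INTERNAL = "Internally developed"


class AIType(Enum):
    GENERATIVE = "Generative AI"
    MACHINE_LEARNING = "Machine learning model"
    AGENT = "AI agent"
    AGENTIC = "Agentic AI"


# Hypothetical capability scale: what the AI can do within its environment.
CAPABILITY = {
    1: "Advisory output only",
    2: "Drafts content for human review",
    3: "Acts within narrow, defined parameters",
    4: "Acts across systems with human oversight",
    5: "Pursues broad objectives with limited oversight",
}


@dataclass
class IdentifiedRisk:
    description: str
    source: Source
    ai_type: AIType
    capability: int  # key into CAPABILITY
    impact: int      # assumed 1-5 scale

    def rating(self) -> int:
        """Rate on the two criteria: capability within the environment x potential impact."""
        return self.capability * self.impact


def group_by_source_and_type(risks):
    """Group risks so each (source, type) combination can be reviewed on its own."""
    groups = defaultdict(list)
    for risk in risks:
        groups[(risk.source, risk.ai_type)].append(risk)
    return dict(groups)


# Hypothetical entries drawn from the risks described above.
risks = [
    IdentifiedRisk("Staff upload client data to a public chatbot",
                   Source.EXTERNAL, AIType.GENERATIVE, capability=2, impact=5),
    IdentifiedRisk("Internal assistant hallucinates policy advice",
                   Source.INTERNAL, AIType.GENERATIVE, capability=2, impact=4),
    IdentifiedRisk("Call-centre agent issues incorrect refunds",
                   Source.INTERNAL, AIType.AGENT, capability=3, impact=3),
]

for (source, ai_type), items in group_by_source_and_type(risks).items():
    for risk in items:
        print(f"{source.value} / {ai_type.value}: {risk.description} -> {risk.rating()}")
```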

2. Assess and categorise your AI risk exposures

Risks can be categorised to make the matrix actionable. Common categories include operational, ethical, security, and legal or compliance risks.

Each risk is then assessed by likelihood and impact to produce a severity rating that helps prioritise attention. Clear categorisation ensures resources are directed to the highest risk areas while keeping the matrix easy to understand and follow.
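As a rough illustration of how categorisation and severity combine to direct attention, the sketch below groups register entries by category and ranks them by severity. The tuple layout, the multiplication rule, and the example entries are assumptions made for the example.

```python
from collections import defaultdict

# Categories named above; the tuple layout of each entry is an assumption.
CATEGORIES = {"operational", "ethical", "security", "legal/compliance"}


def severity(likelihood: int, impact: int) -> int:
    """Severity rating from likelihood and impact (assumed 1-5 scales)."""
    return likelihood * impact


def by_category(register):
    """Group (name, category, likelihood, impact) entries, highest severity first."""
    grouped = defaultdict(list)
    for name, category, likelihood, impact in register:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        grouped[category].append((severity(likelihood, impact), name))
    # Sort by severity descending, then by name, so ties are listed consistently.
    return {cat: sorted(items, key=lambda x: (-x[0], x[1])) for cat, items in grouped.items()}


def top_risks(register, n: int = 3):
    """Names of the n most severe risks across all categories."""
    ranked = sorted(register, key=lambda r: (-severity(r[2], r[3]), r[0]))
    return [name for name, *_ in ranked[:n]]


# Hypothetical register entries.
register = [
    ("Hallucinated policy advice", "operational", 4, 3),
    ("Biased loan recommendations", "ethical", 3, 5),
    ("Training data poisoning", "security", 2, 5),
    ("Personal data exposed in outputs", "legal/compliance", 3, 5),
    ("Chatbot downtime", "operational", 3, 2),
]

print(top_risks(register, 2))
# ['Biased loan recommendations', 'Personal data exposed in outputs']
```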

3. Visualise risks using a clear matrix

Visualisation helps teams spot where risks are highest and how effectively the controls reduce exposure. Pay particular attention to sensitive areas such as personal data, commercial information, and intellectual property.

Keep the approach practical: guide staff behaviour instead of relying on overly complex controls, and involve experts for any risks that require deeper assessment.
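Visualisation doesn’t need specialist software to get started. As a hypothetical illustration, the sketch below prints inherent and residual positions as a simple text grid, so the movement after controls is easy to see. The risk IDs and positions are made up; many teams would use a spreadsheet heat map or a governance tool instead.

```python
from __future__ import annotations


def render_matrix(points: dict[str, tuple[int, int]], size: int = 5) -> str:
    """Text grid: rows are likelihood (highest at top), columns are impact."""
    cells: dict[tuple[int, int], list[str]] = {}
    for risk_id, (likelihood, impact) in points.items():
        cells.setdefault((likelihood, impact), []).append(risk_id)
    lines = []
    for likelihood in range(size, 0, -1):
        row = [",".join(sorted(cells.get((likelihood, impact), []))) or "."
               for impact in range(1, size + 1)]
        lines.append(f"L{likelihood} | " + " ".join(f"{cell:^7}" for cell in row))
    # Footer row labels the impact columns.
    lines.append("     " + " ".join(f"{'I' + str(i):^7}" for i in range(1, size + 1)))
    return "\n".join(lines)


# Hypothetical positions (likelihood, impact) before and after controls.
inherent = {"R1": (4, 5), "R2": (4, 5), "R3": (2, 3)}
residual = {"R1": (2, 4), "R2": (3, 5), "R3": (1, 3)}

print("Inherent risk")
print(render_matrix(inherent))
print("\nResidual risk")
print(render_matrix(residual))
```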

Integrating AI controls and risk mitigation strategies

Once we understand the risks an AI system presents, the next step is to determine and implement appropriate controls. Effective strategies usually include policies, training, and tools to manage exposure across people, process, and technology.

Examples of core controls include:

- Establishing AI policies and clearly communicating them to staff.

- Conducting safe use training, similar to cyber security programs.

- Web filtering to restrict access to public AI tools, such as ChatGPT.

- Implementing security tools to prevent improper use of unauthorised AI applications (often called shadow AI).

- Deploying cloud access security brokers (CASBs) to prevent confidential data from being uploaded to public AI platforms.

A layered approach is generally most effective, where we combine these measures to cover multiple points of potential risk.
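Why layering helps can be shown with a short calculation. The sketch below assumes, for illustration only, that each control independently stops a fixed fraction of incidents; an incident then gets through only if it passes every layer. The effectiveness values are hypothetical.

```python
import math
from typing import Iterable


def combined_effectiveness(layers: Iterable[float]) -> float:
    """Share of incidents stopped by at least one layer, assuming layers act independently.

    Each value is the (hypothetical) fraction of incidents a single control stops.
    An incident gets through only if it passes every layer, so the pass-through rate
    is the product of (1 - effectiveness) across layers.
    """
    layers = list(layers)
    if any(not 0.0 <= e <= 1.0 for e in layers):
        raise ValueError("each effectiveness must be between 0.0 and 1.0")
    return 1 - math.prod(1 - e for e in layers)


# Hypothetical effectiveness values for three of the controls listed above.
controls = {"policy and training": 0.3, "web filtering": 0.5, "CASB": 0.6}
print(round(combined_effectiveness(controls.values()), 2))  # 0.86
```

No single control here is more than 60% effective, yet together they stop roughly 86% of incidents under the independence assumption. Where controls overlap (for example, web filtering and a CASB blocking the same uploads), they are not independent and the real combined effect will be lower than this estimate.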

In developing controls, it’s important to balance innovation and risk management. For example, fully fenced AI systems protect sensitive information, but because they are limited to internal data, their usefulness can be restricted. Publicly available AI tools remove that limitation, but they must be accompanied by robust cyber controls. The key is to weigh the pros and cons and establish a strategy that allows the organisation to innovate safely.

Equally important to the success of any risk management exercise is buy-in from senior management and the board. A strong culture of AI responsibility begins at the top, and this is necessary to ensure staff take the controls seriously.

Government and industry standards

A range of government and industry frameworks have been developed to guide the safe and responsible use of AI. To meet regulatory obligations, organisations need to align AI practices with the relevant frameworks and embed them into policies, processes, and controls.

Regular audits and self-assessments help to ensure compliance, while clear documentation of AI decision making maintains transparency.

Once again, senior management and board oversight are essential for building a culture of accountability and reinforcing responsible AI use, with the ultimate goal of encouraging innovation while effectively managing risk.

Federal government

- Australia’s AI Ethics Principles – guides responsible AI design, development, and use.

- Policy for the responsible use of AI in government – guides agencies to deploy AI responsibly and in line with certain standards.

- Voluntary AI Safety Standard – 10 guidelines for safe AI deployment.

State governments

- NSW: Artificial Intelligence Assessment Framework – mandatory self-assessment for government AI projects.

- VIC: Generative AI guidance – supports safe and responsible AI use in the public sector.

Private sector

- NIST AI Risk Management Framework – voluntary framework to manage AI risks responsibly.

- Cyber.gov.au guidance – advice on secure AI use and threat mitigation.

- OWASP AI Exchange – community-driven standards and testing guidance for AI security.

Guidance on secure AI development

- Deploying AI securely – guidance on secure AI system deployment.

- Secure design – principles for building AI systems with security by design.

- AI data security – best practices for deploying secure and resilient AI systems.

Certification readiness

- ISO/IEC 42001:2023 – AI management systems standard for trustworthy and responsible AI.

Ashwin Pal

Want to learn more?

For a free and confidential discussion, contact Ashwin Pal on 02 8226 4500 or email ashwin.pal@rsm.com.au.

Best practice guidance for using an AI risk matrix

An AI risk matrix (combined with a risk register) is most effective when it’s simple, clear, and actionable. Teams must understand:

- The risks.

- The controls in place.

- Their role in managing them.

Education and ongoing monitoring are key to ensuring the matrix and register remain relevant and effective into the future.
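Because the matrix and register only stay useful if they are maintained, a simple check can flag entries that are overdue for review or that lack an owner or controls. This is a hypothetical sketch; the field names, the 90-day review cycle, and the dates are assumptions for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class RegisterEntry:
    risk: str
    owner: str                      # person accountable for managing the risk
    last_reviewed: date
    controls: list[str] = field(default_factory=list)
    review_every_days: int = 90     # hypothetical review cycle

    def next_review(self) -> date:
        return self.last_reviewed + timedelta(days=self.review_every_days)


def overdue(register: list[RegisterEntry], today: date) -> list[RegisterEntry]:
    """Entries whose scheduled review date has already passed."""
    return [e for e in register if e.next_review() < today]


def gaps(register: list[RegisterEntry]) -> list[RegisterEntry]:
    """Entries with no owner or no recorded controls."""
    return [e for e in register if not e.owner.strip() or not e.controls]


# Hypothetical register entries.
register = [
    RegisterEntry("Public AI data leakage", "Head of IT", date(2024, 1, 1), ["Web filtering", "CASB"]),
    RegisterEntry("Chatbot hallucinations", "", date(2024, 3, 1)),
]
today = date(2024, 4, 15)
print([e.risk for e in overdue(register, today)])  # ['Public AI data leakage']
print([e.risk for e in gaps(register)])            # ['Chatbot hallucinations']
```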

Tools, templates, and resources for AI risk assessment

To help your leadership team assess AI-related risks, we’ve put together:

- An AI risk assessment checklist.

- A risk matrix example.

- A risk consequence table.

- A template for recording these risks in your risk register.


How RSM can assist with AI risk

RSM provides a range of AI-related risk services, such as:

- Creation of AI policies and use cases.

- Assessment of AI systems.

- Evaluation of governance frameworks.

- Application of a risk matrix for AI.

- Use of other risk management tools and frameworks for AI-related risks.

Our specialist Risk Advisory team has extensive experience in AI strategy and implementation and can identify vulnerabilities and advise on mitigation strategies.

We also support leadership teams in making informed decisions on AI governance and oversight – helping to ensure AI initiatives are safe and strategically aligned.

Frequently asked questions

How do you build an AI risk matrix?

Start by identifying all potential AI risks, including operational, data, security, and compliance risks. Assess each risk for likelihood and impact, then map them in a visual matrix. Begin with inherent risks, implement suitable controls, and create a second view that shows the remaining risk. Keep the matrix simple, clear, and regularly updated as AI systems evolve.

Which AI risks should be prioritised?

Focus on risks with the highest potential impact to your organisation. This often includes exposure of personal or sensitive data, operational failures such as AI hallucinations, biased outputs, and legal or compliance breaches. Prioritising these ensures resources are directed to areas that could cause the greatest harm.

Are there Australian government frameworks for managing AI risk?

Yes. Key frameworks include the AI Ethics Principles, the NSW Artificial Intelligence Assessment Framework, and VIC generative AI guidance.

For commercial or international standards, refer to the NIST AI Risk Management Framework, OWASP AI Exchange, and ISO/IEC 42001:2023 for AI management systems. All of these frameworks are listed above.